In the previous blog post, I discussed strategies for elevating your Python skills, including:

- Logging and Instrumentation

- Testing

In this article, I will cover the following topics:

- Documentation

- Version control and Code review

Python Documentation: Best Practices and Examples

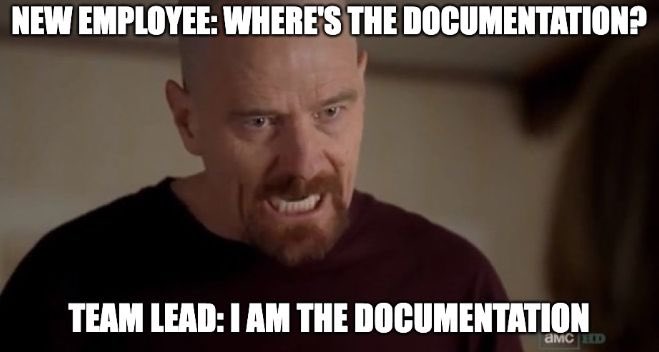

Documentation is an important aspect of software development, as it helps other developers understand the purpose and use of the code. In Python, there are several ways to document your work, including comments, docstrings, and project documentation.

Comments

Comments are text in a source file that the interpreter ignores; they exist only to give readers information about the code. They are useful for explaining the purpose of the code and how it works. In Python, a comment starts with the # symbol and runs to the end of the line.

For example:

    # This is a comment

    # This line of code calculates the square of a number
    x = 4
    y = x**2
    print(y)  # Output: 16

There are several types of comments that you can use in Python:

- Single-line comments, as shown in the example above, are used for short explanations or notes about a single line of code.

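- Multiline comments, also known as block comments, are used for longer explanations that span multiple lines. Python has no dedicated block-comment syntax: the conventional form is several consecutive lines that each start with #. A triple-quoted string (''' or """) that is not assigned to anything is often used for the same purpose, although it is technically a string literal rather than a comment.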

    '''
    This is a multiline comment.
    It can be used to provide a detailed explanation of the code,
    or to include examples or notes that are too long to fit in a single-line comment.
    '''

It is good practice to add a comment wherever the intent of the code is not obvious from the code itself. However, be careful not to overuse comments: a comment that only restates what a line already says adds noise and makes the code harder to read.
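As a rough illustration of that balance, the sketch below contrasts a comment that only restates the code with one that records intent. The function name and the 0.2 rate are hypothetical, chosen purely for this example.

```python
# Hypothetical example: the names and the tax rate are illustrative only.
TAX_RATE = 0.2


def price_with_tax(net_price):
    # A comment such as "multiply net_price by 1 plus TAX_RATE" would be
    # redundant, because it only repeats what the expression already says.
    # Useful: round to 2 decimals because the result is displayed as currency.
    return round(net_price * (1 + TAX_RATE), 2)


print(price_with_tax(10))  # Output: 12.0
```

The second comment earns its place because it explains a decision (the rounding) that a reader could not infer from the code alone.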

Docstrings

Docstrings, short for documentation strings, are string literals that document Python modules, classes, functions, and methods. They are written in triple quotes (''' or """) and must be the first statement in the module, class, or function body. Unlike comments, they are kept by the interpreter and remain available at runtime.

For example:

    def square(x):
        '''
        Calculates the square of a number.

        Parameters:
        x (int or float): The number to be squared.

        Returns:
        int or float: The square of x.
        '''
        return x**2

Docstrings follow a common convention (described in PEP 257) rather than a format enforced by the language: the first line gives a brief summary of the function, and the lines after it give a more detailed explanation. The parameters and return values of the function should also be described in the docstring.

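In addition to providing documentation for other developers, docstrings can also be accessed at runtime through the __doc__ attribute. For example (shown here with the surrounding whitespace trimmed; the exact whitespace in the result depends on how the docstring is indented and on the Python version):

    >>> square.__doc__
    'Calculates the square of a number.\n\n Parameters:\n x (int or float): The number to be squared.\n \n Returns:\n int or float: The square of x.'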
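Because the raw __doc__ string keeps whatever indentation the source file had, a cleaned-up version is often easier to work with. The sketch below uses inspect.getdoc from the standard library, which removes the common indentation and any leading or trailing blank lines. The square function is repeated so that the snippet runs on its own.

```python
import inspect


def square(x):
    """
    Calculates the square of a number.

    Parameters:
    x (int or float): The number to be squared.

    Returns:
    int or float: The square of x.
    """
    return x**2


# inspect.getdoc() strips the shared indentation and the blank lines
# at the start and end of the raw docstring.
doc = inspect.getdoc(square)
print(doc.splitlines()[0])  # Output: Calculates the square of a number.
```

In an interactive session, help(square) displays the same cleaned-up text.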

Docstrings are a powerful tool for documenting your code: tools such as IDEs (Integrated Development Environments) and documentation generators read them to show help text and to build reference pages.

To learn more about docstrings, see Example Google Style Python Docstrings.
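For orientation, here is a minimal sketch of the section layout commonly used in Google-style docstrings (Args, Returns, Raises). The safe_divide function is a hypothetical example of mine, not taken from that guide.

```python
def safe_divide(numerator, denominator):
    """Divide one number by another.

    Args:
        numerator (float): The value to be divided.
        denominator (float): The value to divide by.

    Returns:
        float: The quotient numerator / denominator.

    Raises:
        ValueError: If denominator is zero.
    """
    if denominator == 0:
        raise ValueError("denominator must not be zero")
    return numerator / denominator


print(safe_divide(9, 3))  # Output: 3.0
```

Documenting the exception alongside the arguments tells callers what they need to handle without reading the function body.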

Project Documentation

In addition to including documentation in your code, it is also important to provide documentation for the overall project. This can include information about the purpose and goals of the project, the required dependencies, installation instructions, and usage examples.

There are several ways to include project documentation:

- README file: A README file is a common way to provide project documentation. It is usually placed in the root directory of the project and should include a brief overview of the project, as well as any necessary information for setup and usage. Here is an example of a simple README file:

      # Project Name

      A brief description of the project

      ## Requirements

      - List of required libraries or dependencies

      ## Installation

      Instructions for installing the project

      ## Usage

      Examples of how to use the project

      ## Contributing

      Information about how to contribute to the project

  If you are hosting your project on GitHub, you can include a README file in the root directory of your repository. GitHub will automatically display the contents of the README file on the repository page, making it easy for users to find and access the documentation.

- Documentation generators: There are various tools available for generating documentation from your code and project documentation. Some popular options include Sphinx and Doxygen. These tools can generate HTML or PDF documentation from your code, docstrings, and project documentation, providing a more organized and visually appealing way to document your project.
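To make the documentation-generator option more concrete, here is a minimal sketch of a Sphinx configuration file (conf.py), which is itself plain Python. The project and author values are placeholders I made up; sphinx.ext.autodoc and sphinx.ext.napoleon are extensions that ship with Sphinx.

```python
# conf.py: a minimal Sphinx configuration sketch.
# The project and author values below are placeholders, not real names.
project = "my-project"
author = "Your Name"

extensions = [
    "sphinx.ext.autodoc",   # pulls documentation out of docstrings
    "sphinx.ext.napoleon",  # lets autodoc parse Google and NumPy style docstrings
]

# "alabaster" is the theme Sphinx uses by default.
html_theme = "alabaster"
```

With a configuration along these lines, the docstrings you already wrote become the source of the generated reference pages, so the code and its documentation are less likely to drift apart.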

Whichever combination of comments, docstrings, and project documentation you use, keep it thorough and up to date: cover setup and usage, include examples, and revise the documentation whenever the code changes so that it stays accurate and useful.

Python Version Control and Code Review Best Practices for Data Science Projects

As a data scientist, you may be familiar with version control and code review practices, but it is always good to refresh your knowledge. In this section, we will cover why version control is important and how to version control Jupyter notebooks and production machine learning models. We will also discuss code review best practices and their benefits for data science projects.

Why Version Control is Needed

Version control is a system that tracks and manages changes to your code and other project files. It is particularly useful for data science projects, as it allows you to track changes to your code and data, as well as collaborate with other team members.

Here are some key benefits of using version control:

- Track changes: Version control allows you to see the history of changes to your code and data, so you can track the progress of your project and revert to previous versions if necessary.

- Collaborate with team members: Version control makes it easy for multiple team members to work on the same project, as it allows them to share and merge their changes without overwriting each other’s work.

- Maintain a clean project directory: With version control, you can keep your project directory clean and organized. The history replaces ad hoc copies of files, and large data files or intermediate results can be stored in a separate repository.

- Ensure reproducibility: By tracking changes to your code and data, you can ensure that your results are reproducible, as you can always recreate the exact version of the code and data used to generate the results.
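The reproducibility point can be sketched in a few lines: record which commit and which data file produced a result. This is only an illustration under my own assumptions; the function names are hypothetical, and the commit lookup simply returns None when Git is not installed or the directory is not a repository.

```python
import hashlib
import subprocess


def file_sha256(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def current_git_commit():
    """Return the current commit hash, or None when it cannot be determined."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"],
            capture_output=True, text=True, check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return result.stdout.strip()


def run_record(data_path):
    """Bundle the code version and a data fingerprint for one analysis run."""
    return {"commit": current_git_commit(), "data_sha256": file_sha256(data_path)}
```

Saving a record like this next to each set of results makes it possible to check later that a rerun used the same code and the same data.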

Version Control Jupyter Notebooks

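Jupyter notebooks are a popular tool for data science projects, as they allow you to combine code, text, and visuals in a single document. However, version controlling Jupyter notebooks can be tricky: a notebook is stored as a JSON file that holds the cell outputs and execution counts alongside the code, so re-running a notebook can produce large, hard-to-read diffs even when the code has not changed.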

One way to version control Jupyter notebooks is to use the nbconvert command to convert the notebooks to a format that is easier to version control, such as Markdown or Python scripts. For example:

jupyter nbconvert --to script notebook.ipynb

This will convert the notebook to a Python script, which can be version controlled using Git or other version control systems.

You can also use tools such as Jupytext to automate the conversion and synchronization of Jupyter notebooks and plain text files.
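Another way to reduce noisy diffs is to clear the outputs before committing. The sketch below does this with only the standard library, assuming the version 4 notebook layout in which each code cell carries outputs and execution_count fields. The strip_outputs helper is hypothetical and is not a replacement for the tools mentioned above.

```python
import json


def strip_outputs(notebook):
    """Return a copy of a notebook dict with code-cell outputs removed."""
    cleaned = json.loads(json.dumps(notebook))  # deep copy via a JSON round trip
    for cell in cleaned.get("cells", []):
        if cell.get("cell_type") == "code":
            cell["outputs"] = []
            cell["execution_count"] = None
    return cleaned


# A tiny in-memory notebook, for illustration only.
notebook = {
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {
            "cell_type": "code",
            "execution_count": 3,
            "metadata": {},
            "source": ["print(2 + 2)"],
            "outputs": [{"output_type": "stream", "name": "stdout", "text": ["4\n"]}],
        }
    ],
}

cleaned = strip_outputs(notebook)
print(cleaned["cells"][0]["outputs"])  # Output: []
```

For a real file, you would load it with json.load, pass the result through the function, and write it back with json.dump before committing.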

Version Control Production Machine Learning Models

In addition to version controlling your code and data, it is also important to version control your production machine learning models. This ensures that you can track and reproduce the exact version of the model that is deployed in production.

Here are some best practices for version controlling production machine learning models:

- Store model artifacts: Model artifacts are files that are generated during the training process, such as model weights, configuration files, and preprocessing scripts. It is important to store these artifacts in the version control system, as they are necessary to reproduce the model.

- Use tags to identify production models: Use tags to mark specific versions of the model as production models. This makes it easy to identify and deploy the correct version of the model.

- Store model metadata: Model metadata, such as the version of the model, the training data used, and the training parameters, is important for understanding the performance and behavior of the model. Store this metadata in the version control system, as well as in a separate database or metadata store.

- Automate model deployment: Use a continuous integration and deployment (CI/CD) pipeline to automate the deployment of models to production. This ensures that the correct version of the model is deployed, and reduces the risk of human error.
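As a small sketch of the metadata practice, the record below ties a model version to a fingerprint of its weights and to the settings used to train it. Every name and value here (the version string, the parameters, the data file name) is made up for illustration.

```python
import hashlib
import json


def model_metadata(version, weights, training_params, training_data):
    """Build a metadata record for one trained model."""
    return {
        "version": version,
        # A hash of the serialized weights identifies the exact artifact.
        "weights_sha256": hashlib.sha256(weights).hexdigest(),
        "training_params": training_params,
        "training_data": training_data,
    }


record = model_metadata(
    version="1.2.0",
    weights=b"serialized model bytes",  # stand-in for a real weights file
    training_params={"learning_rate": 0.01, "epochs": 20},
    training_data="customers_2023.csv",
)
print(json.dumps(record, indent=2, sort_keys=True))
```

Committing a file like this together with the training code, and tagging that commit as the production version, gives you one place to look when you need to know exactly what is deployed.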

Code Review Best Practices

Code review is the process of having other developers review your code to ensure that it meets certain standards and is of high quality. It is an important best practice in data science projects, as it can help catch errors and improve the overall quality of the code.

Here are some best practices for code review:

- Involve multiple reviewers: Involve multiple reviewers in the code review process, as different reviewers may catch different issues.

- Focus on code quality: The main goal of code review is to improve the quality of the code, so focus on issues such as readability, maintainability, and performance.

- Be respectful and constructive: Code review can be a sensitive topic, as it involves critiques of someone’s work. It is important to be respectful and constructive in your feedback, and to direct it at the code rather than at the person who wrote it.

- Use code review tools: There are various code review tools available, such as GitHub Pull Requests and Gerrit. These tools provide an interface for reviewing code, as well as features such as commenting, inline suggestions, and voting.

- Establish code review guidelines: Establish guidelines for code review in your team, such as the scope of the review (e.g., code, tests, documentation), the level of detail, and the review process (e.g., who should review the code, how many reviewers should be involved).

- Include testing in the review process: Testing is an important aspect of data science projects, and should be included in the code review process. Reviewers should check that the code is properly tested and that the tests are effective.

Code review is beneficial for the reviewer, the reviewee, and the team as a whole. It promotes best programming practices, catches errors, and improves the overall quality of the code. By following these best practices, you can ensure that your code review process is effective and efficient.

In conclusion, proper documentation, version control, and code review are essential best practices in data science projects, as they help ensure the quality and maintainability of the code. Documentation, including comments, doc strings, and project documentation, provides information about the code and its purpose. Version control systems, such as Git, track and manage changes to the code and allow for collaboration with team members. Code review involves having other developers review the code to ensure that it meets certain standards and is of high quality. By following these best practices, you can improve the reliability and reproducibility of your data science projects and promote best programming practices within your team.